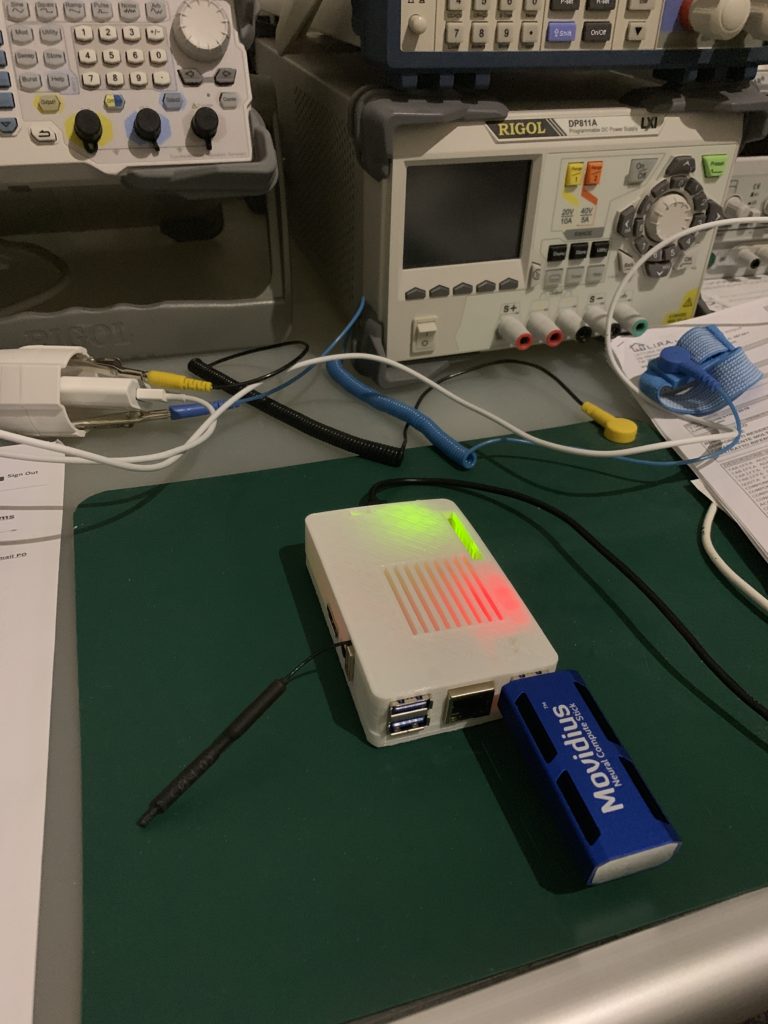

Google released a lineup of devices equipped with the Edge TPU coprocessor, called “Coral”, with the intent of bringing intelligence to IoT devices at the edge.

At this time there are two devices: the Coral Dev Board and the USB Accelerator. The first is meant for developing an entire intelligent node from scratch, while the second is meant to bring intelligence to an existing system.

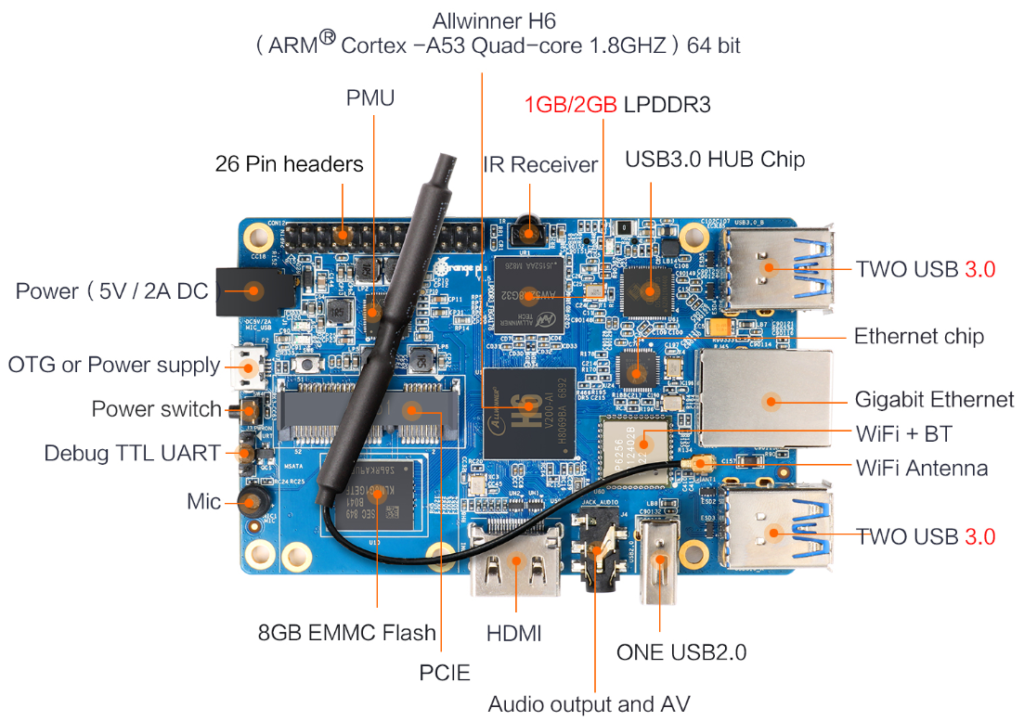

So, the USB Accelerator is the best solution if you already have a project built on a Raspberry Pi, an UP board, an Orange Pi 3, or similar.

In this article, we will see how to use the Coral USB Accelerator with a Raspberry Pi Zero.

Required hardware

- 1 x Raspberry Pi Zero (W)

- 1 x microSDHC class 6+ / 16+GB

- 1 x microUSB power supply (5 V, 2000 mA)

- 1 x Google’s Coral USB Accelerator

I am taking for granted that you have a Raspberry Pi up and running, in this case a “Zero” (but this should work the same way with other Raspbian-equipped boards). If you don’t know how to proceed, I kindly suggest following the guide HERE.

Note: the Raspberry Pi Zero (W or not) runs on a Broadcom BCM2835, a single-core SoC with a 32-bit ARM1176JZF-S processor (ARM11 family, armv6* architecture) clocked at 1 GHz and equipped with 512 MB of RAM. The architecture is usually an important detail in hardware projects, because pre-compiled binaries built for one ARM architecture can conflict with another. For example, “armv7-a” pre-compiled binaries will not necessarily work on 64-bit “armv8-a” CPUs, and even when we have the source code, we may not be able to recompile it for our architecture. So, write down this detail!

Pre-Flight check of the Raspberry platform

We need to make sure that everything is up to date and that we are starting from the same setup. As I don’t need a desktop environment, I opted for Raspbian Stretch Lite, based on Debian 9 and compiled for the armv6l architecture (note: NOT armv7*!).

Open a terminal (directly on the Pi board, or via SSH).

pi@raspberrypi:~ $ sudo apt-get update

pi@raspberrypi:~ $ sudo apt-get upgrade

pi@raspberrypi:~ $ uname -a

In my case:

Linux raspberrypi 4.19.42+ #1219 Tue May 14 21:16:38 BST 2019 armv6l GNU/Linux
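The architecture field in that output can also be checked from a script. The following is a minimal sketch, not an official check: the function name `classify_arch` and its labels are made up for illustration, and the "supported" list is only an assumption based on the architectures discussed in this article.

```shell
#!/usr/bin/env bash
# Classify a machine string (as printed by `uname -m`).
# ASSUMPTION: the "supported" list below is illustrative, taken from the
# architectures named in this article, not from an official source.
classify_arch() {
  case "$1" in
    x86_64|armv7l|aarch64) echo "supported" ;;
    armv6l)                echo "unsupported" ;;
    *)                     echo "unknown" ;;
  esac
}

# Report the architecture of the machine this script runs on.
arch="$(uname -m)"
echo "Detected ${arch}: $(classify_arch "${arch}")"
```

On the Raspberry Pi Zero used here, `uname -m` reports `armv6l`, so the function would fall into the "unsupported" branch.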

Our Challenge

It’s time to face the catch: Google’s Edge TPU API is currently only compatible with the Raspberry Pi 3 (model A+ or B+) or its successors, x86 (32 or 64 bit), or other generic ARM platform architectures (“armv7l” or “aarch64”), but not with the low-power, low-cost 32-bit “armv6l” ARM SoC architecture we have on our Raspberry Pi Zero and on the first-generation Raspberry Pi.

So, what now? Well, we’ll try to find a workaround! 🙂

The most obvious thing to do is to recompile the Coral Edge TPU library for “armv6l” but… it’s not so easy, unfortunately!

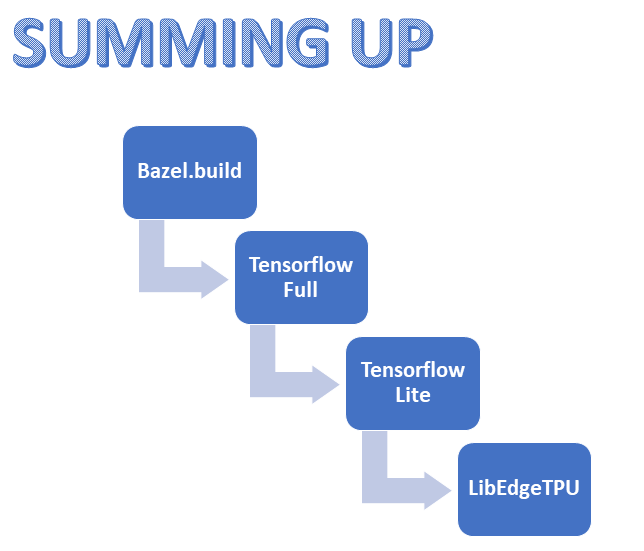

The Edge TPU library is built upon the Tensorflow Lite framework, which in turn is built from the “full” Tensorflow sources: so, we need to recompile Tensorflow first. To do that, we’ll need a full installation of Bazel, a free software tool for automating the building and testing of software. But Bazel doesn’t support our “armv6l” out of the box, so…

Recompiling Bazel for “armv6l” architecture

To recompile Bazel we need more than 512 MB of RAM. Since we cannot upgrade the RAM soldered on the board, we can create a file on disk that the kernel uses as an extension of RAM: it’s called a “swap file”.

First of all, create a file which will be used as additional swap:

pi@raspberrypi:~ $ sudo dd if=/dev/zero of=/swapfile bs=1024 count=1048576

Set the permissions so that only root can read and write the swap file:

pi@raspberrypi:~ $ sudo chmod 600 /swapfile

Use the mkswap utility to set up the file as a GNU/Linux swap area:

pi@raspberrypi:~ $ sudo mkswap /swapfile

Activate the swap file:

pi@raspberrypi:~ $ sudo swapon /swapfile

Then, let’s make the change permanent by editing the /etc/fstab file:

pi@raspberrypi:~ $ sudo echo '/swapfile swap swap defaults 0 0' | sudo tee -a /etc/fstab > /dev/null

Verify that the swap file is active and working:

pi@raspberrypi:~ $ sudo swapon --show

You should clearly see /swapfile in the output. Alternatively:

pi@raspberrypi:~ $ sudo free -h

You should see the additional amount of swap space in the output.
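As a quick sanity check on the numbers, the `dd` call above writes 1048576 blocks of 1024 bytes, which is 1 GiB. The sketch below reproduces that arithmetic and reads the swap total from `/proc/meminfo`. The helper names `swap_block_count` and `swap_total_kb` are hypothetical, introduced only for this example.

```shell
#!/usr/bin/env bash
# Number of blocks dd must write for a swap file of SIZE_MIB mebibytes
# when each block is BLOCK_BYTES bytes (illustrative helper).
swap_block_count() {
  local size_mib="$1" block_bytes="$2"
  echo $(( size_mib * 1024 * 1024 / block_bytes ))
}

# Extract the SwapTotal value (in kB) from /proc/meminfo-style text on stdin.
swap_total_kb() {
  awk '/^SwapTotal:/ { print $2 }'
}

# 1024 MiB with bs=1024 gives the count used in the dd command.
echo "dd count for 1 GiB at bs=1024: $(swap_block_count 1024 1024)"

# Only read the live value when the file exists (Linux systems).
if [ -r /proc/meminfo ]; then
  echo "SwapTotal now: $(swap_total_kb < /proc/meminfo) kB"
fi
```

For a different swap size, only the first argument would change, for example `swap_block_count 2048 1024` for 2 GiB.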

Now, you should adjust the swappiness value: this will make the kernel use the swap space more aggressively than usual.

pi@raspberrypi:~ $ sudo echo 'vm.swappiness=100' | sudo tee -a /etc/sysctl.conf > /dev/null

If you want, you can reboot now.

pi@raspberrypi:~ $ sudo reboot

Log back in and install a few prerequisite Debian packages:

pi@raspberrypi:~ $ sudo apt-get install build-essential openjdk-8-jdk python zip unzip git openmpi-bin openmpi-common python-pip

This will also automatically install all the needed dependencies.

Now we can start recompiling Bazel (bazel.build). Let’s download the sources as a single, architecture-independent distribution archive from the Bazel repository:

pi@raspberrypi:~ $ wget https://github.com/bazelbuild/bazel/releases/download/0.26.1/bazel-0.26.1-dist.zip

Now, unpack the archive and enter the workspace:

pi@raspberrypi:~ $ unzip bazel-0.26.1-dist.zip -d bazel-dist

pi@raspberrypi:~ $ cd bazel-dist

pi@raspberrypi:~/bazel-dist $ sudo chmod u+w ./* -R

And compile, taking advantage of the additional swap space we created:

pi@raspberrypi:~/bazel-dist $ env EXTRA_BAZEL_ARGS="--host_javabase=@local_jdk//:jdk" BAZEL_JAVAC_OPTS="-J-Xmx512M -J-Xms512M" bash ./compile.sh

This task could require several hours.
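Since a build of this length can outlive an SSH session, it may be worth detaching it from the terminal. The following is a minimal sketch using the standard `nohup` utility. The helper `run_detached`, the variable `DETACHED_PID`, and the log file name are hypothetical, and the demo runs a harmless `echo` rather than the real build.

```shell
#!/usr/bin/env bash
# Run a command detached from the terminal, sending stdout and stderr to a log.
# Usage: run_detached LOGFILE COMMAND [ARGS...]   (illustrative helper)
# The PID of the background job is stored in DETACHED_PID.
run_detached() {
  local logfile="$1"
  shift
  nohup "$@" > "${logfile}" 2>&1 &
  DETACHED_PID=$!
}

# Demo with a harmless command; the real build command would go here instead.
demo_log="${TMPDIR:-/tmp}/detached-demo.log"
run_detached "${demo_log}" echo "build finished"

# Wait for the background job, then show what it logged.
wait "${DETACHED_PID}"
cat "${demo_log}"
```

For the actual build, the same helper could be called as `run_detached compile.log env EXTRA_BAZEL_ARGS=... BAZEL_JAVAC_OPTS=... bash ./compile.sh`, with the values shown in the compile step, and progress followed with `tail -f compile.log`.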

The compiled output is placed into output/bazel. For convenience we will copy this binary to /usr/local/bin (a directory that’s on your PATH).

pi@raspberrypi:~/bazel-dist $ sudo cp output/bazel /usr/local/bin/bazel

Installing requirements for Python/PIP

As it’s always better not to use the Python package manager (a.k.a. pip) directly on the system, let’s prepare a virtual environment to use as a workspace.

pi@raspberrypi:~/bazel-dist $ cd ..

pi@raspberrypi:~ $ sudo pip install virtualenv

pi@raspberrypi:~ $ virtualenv tensorflowenv

Now we will work directly inside the virtual environment we just created.

pi@raspberrypi:~ $ source tensorflowenv/bin/activate

And using pip we are going to install all the Python package requirements we need:

(tensorflowenv) pi@raspberrypi:~ $ pip install -U pip six numpy wheel setuptools mock 'future>=0.17.1'

(tensorflowenv) pi@raspberrypi:~ $ pip install keras_applications==1.0.6 --no-deps

(tensorflowenv) pi@raspberrypi:~ $ pip install keras_preprocessing==1.0.5 --no-deps

Recompiling Tensorflow for “armv6l” architecture

Download a copy of the latest stable release of Tensorflow (in this specific case, version 1.12.2):

(tensorflowenv) pi@raspberrypi:~ $ git clone https://github.com/tensorflow/tensorflow.git

(tensorflowenv) pi@raspberrypi:~ $ cd tensorflow

(tensorflowenv) pi@raspberrypi:~/tensorflow $ git checkout r1.12

Configure your system build by running:

(tensorflowenv) pi@raspberrypi:~/tensorflow $ ./configure

Use bazel to make the TensorFlow package builder with CPU-only support:

(tensorflowenv) pi@raspberrypi:~/tensorflow $ bazel build

And, taking advantage of this workspace, also the Tensorflow Python package:

(tensorflowenv) pi@raspberrypi:~/tensorflow $ bazel build --config=opt //tensorflow/tools/pip_package:build_pip_package

Getting started with the Coral USB Accelerator

As briefly mentioned before, Google’s Coral USB Accelerator contains an Edge TPU, a Tensor Processing Unit (TPU) coprocessor designed by Google, and can be connected to your (Linux-based) development board through its USB 3.0 Type-C socket.

What does it mean? The onboard Edge TPU makes it possible to perform high-performance machine/deep learning (convolutional neural network) inferencing tasks without a powerful GPU.
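Before going further, it can be handy to confirm that the board actually sees the accelerator on the USB bus. The sketch below parses `lsusb`-style output. The helper name `edgetpu_usb_state` is hypothetical, and the two USB IDs are an assumption about how the device enumerates before and after its firmware is loaded, so treat them as values to verify on your own hardware.

```shell
#!/usr/bin/env bash
# Report whether lsusb-style text on stdin lists an Edge TPU USB device.
# ASSUMPTION: the device enumerates as 1a6e:089a before the runtime loads
# its firmware and as 18d1:9302 afterwards; verify these IDs on your setup.
edgetpu_usb_state() {
  local listing
  listing="$(cat)"
  if printf '%s\n' "${listing}" | grep -qi 'ID 18d1:9302'; then
    echo "initialized"
  elif printf '%s\n' "${listing}" | grep -qi 'ID 1a6e:089a'; then
    echo "uninitialized"
  else
    echo "absent"
  fi
}

# Query the live bus only when lsusb is available on this machine.
if command -v lsusb > /dev/null 2>&1; then
  echo "Edge TPU: $(lsusb | edgetpu_usb_state)"
fi
```

If the result is "absent" with the accelerator plugged in, the cable, the USB OTG adapter, or the power supply are the first things to check.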

To get started, perform the following steps:

pi@raspberrypi:~ $ cd

pi@raspberrypi:~ $ git clone https://coral.googlesource.com/edgetpu-native --branch release-chef

pi@raspberrypi:~ $ cd

pi@raspberrypi:~ $ wget https://dl.google.com/coral/edgetpu_api/edgetpu_api_latest.tar.gz -O edgetpu_api.tar.gz --trust-server-names

pi@raspberrypi:~ $ tar xzf edgetpu_api.tar.gz

pi@raspberrypi:~ $ cd edgetpu_api

Now, let’s inject the Tensorflow binaries we need into the edgetpu_api folder.

pi@raspberrypi:~/edgetpu_api $ bash ./install.sh

Ok, that’s all for this first part. I hope you found this quick guide useful. I am planning to continue with the second and final part of the article in the coming weeks. I’ll also work on the final setup and design for my project using the board and the TPU unit.

Further Readings/References

About Coral USB Accelerator:

- USB Accelerator datasheet

- Get started with the USB Accelerator

- Tensorflow Lite: Python API overview & demos

- Coral Libedgetpu git repository

About Raspberry Pi Zero (W):

- ARM Versions

- ARM11 – Microarchitectures – ARM

- RPF Raspberry Pi Zero

- Raspberry Pi Zero (W) nice case to 3D-print on Thingiverse.com by geertvda

Tensorflow:

Miscellaneous:

- Create a Linux Swap File

- Compiling Bazel from source

- bazelbuild/bazel releases repository on Github

- Building TensorFlow 1.3.0-rc1 for Raspberry Pi/Ubuntu 16.04: a Step-By-Step Guide

Last update: Friday, August 2nd, 2019